What is Kubernetes? – A dive into K8s and beyond



Kubernetes is an open-source platform widely used in the industry to automate the deployment, scaling, and management of containerized applications. A recent survey by EdgeDelta reported that more than 60% of organizations have already adopted Kubernetes, and that adoption rates increased to 96% in 2024. Over 50,000 organizations and 5.6 million developers use Kubernetes globally. These statistics show that Kubernetes has become the de facto standard for orchestrating containers, particularly in cloud environments. Kubernetes is most beneficial when an organization has a microservice architecture and wants to orchestrate all of its containers so they scale efficiently.

Kubernetes provides significant business value because it helps organizations scale and run applications efficiently. According to a survey by Spectro Cloud, nearly 70% of organizations have matured their use of Kubernetes, and many others continue to adopt Kubernetes and work towards maturity. However, adopting Kubernetes comes with its own set of challenges.

By using Kubernetes, teams become cloud-agnostic, i.e., they can run their workloads on a public or private cloud, on-premises, or in hybrid configurations. This makes Kubernetes an ideal tool for deploying workloads that need to run in, and be ported between, different environments.

Kubernetes Architecture

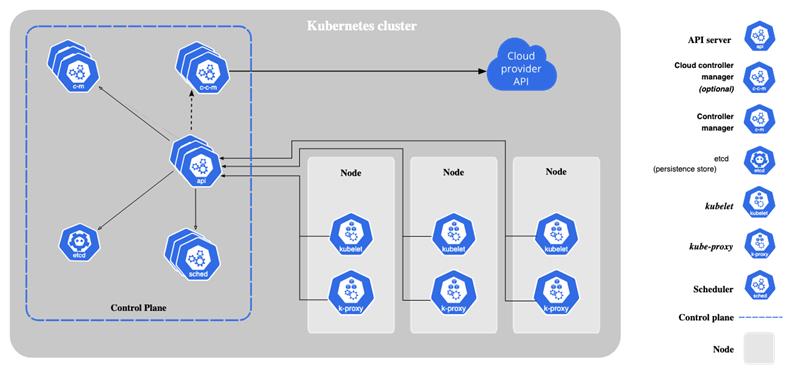

When you create a Kubernetes cluster, it has many components that work together to orchestrate containers. Because Kubernetes is highly distributed, it also brings a lot of complexity. The image above shows the Kubernetes architecture at a high level.

Let’s take a quick look at the different components of Kubernetes:

Kubernetes Nodes

A Kubernetes node is an individual machine where workloads can run. It can be a physical computer or a virtual machine. Multiple such nodes are connected together to form a Kubernetes cluster. As of Kubernetes v1.31, a single cluster supports up to 5,000 nodes.

A Kubernetes cluster has two main types of nodes. The control plane node houses the critical Kubernetes components that orchestrate workloads. The worker nodes, on the other hand, are where application workloads are deployed. To learn about Kubernetes nodes in greater depth, please check out our dedicated article on Kubernetes Nodes.

Kubernetes API Server

The Kubernetes API server acts as the entry point for the Kubernetes cluster. Every request, whether it comes from an external client such as kubectl or from another control plane component, goes to the API server first. The API server authenticates and authorizes the request, validates it, and then reads or updates the cluster state accordingly. The other Kubernetes components watch the API server and act on the changes it records.

Kubernetes etcd

etcd is a distributed, consistent key-value store that Kubernetes uses as its backing database. It stores the state of the cluster, such as which Kubernetes objects have been created or deleted, their configuration, and their status.

The API server is the only Kubernetes component that interacts with etcd directly. If any other component wants to read or update data in etcd, it cannot do so directly. Instead, the component talks to the API server, and the API server makes the necessary changes in etcd.

Kubernetes Scheduler

In Kubernetes, containers run within a unit called a pod. A pod is simply a wrapper around one or more containers. Whenever you deploy any kind of workload, the application always runs inside a pod.

When a request to run a workload is sent to the API server, a node needs to be selected for the pod to run on. Filtering the available nodes and selecting the most suitable one for the pod is the job of the kube-scheduler. You can either define rules for how the pod should be scheduled, or leave it up to the scheduler to select the best available node.
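The filter-then-select flow described above can be sketched in Python. This is a simplified illustration under stated assumptions, not kube-scheduler's code: the `Node` fields, the single resource-fit filter, and the "most capacity left after placement" score are hypothetical stand-ins for the scheduler's real filter and score plugins.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    # Hypothetical view of a node: total capacity and what pods already request.
    name: str
    cpu_capacity_m: int     # CPU capacity in millicores
    cpu_requested_m: int    # CPU already requested by pods on this node
    mem_capacity_mib: int   # memory capacity in MiB
    mem_requested_mib: int  # memory already requested by pods on this node


def schedule(nodes: list[Node], cpu_m: int, mem_mib: int) -> Optional[str]:
    """Return the name of the node to place a pod on, or None if nothing fits."""
    # Filter: keep only nodes whose remaining capacity covers the pod's requests.
    feasible = [
        n for n in nodes
        if n.cpu_requested_m + cpu_m <= n.cpu_capacity_m
        and n.mem_requested_mib + mem_mib <= n.mem_capacity_mib
    ]
    if not feasible:
        return None  # the pod would remain Pending

    # Score: average fraction of CPU and memory left free after placing the pod.
    def score(n: Node) -> float:
        cpu_left = (n.cpu_capacity_m - n.cpu_requested_m - cpu_m) / n.cpu_capacity_m
        mem_left = (n.mem_capacity_mib - n.mem_requested_mib - mem_mib) / n.mem_capacity_mib
        return (cpu_left + mem_left) / 2

    # Highest score wins; ties go to the alphabetically first node name.
    return min(feasible, key=lambda n: (-score(n), n.name)).name


nodes = [
    Node("node-a", 4000, 3500, 8192, 1024),
    Node("node-b", 4000, 1000, 8192, 4096),
    Node("node-c", 2000, 500, 4096, 512),
]
print(schedule(nodes, cpu_m=500, mem_mib=512))
```

Returning `None` mirrors a pod staying in the Pending state when no node has room for it.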

Our dedicated article on Kubernetes scheduling covers all of these concepts and how to use them.

Kubernetes Controller Manager

Kubernetes has several control loops that are responsible for observing the cluster, managing it, and taking appropriate action when a particular event occurs. These control loops are called controllers. Multiple controllers exist in Kubernetes, each managing a different aspect of the cluster, and the kube-controller-manager is the control plane component that runs the built-in ones.

For example, there is a Deployment controller, Replication controller, Endpoints controller, Namespace controller, and ServiceAccount controller. Each of these controllers is responsible for managing a different kind of Kubernetes resource.
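To make the idea of a control loop concrete, here is a hypothetical sketch of what an Endpoints-style controller computes on each pass: the desired endpoint list is the IPs of ready pods whose labels match a Service's selector, and the controller only acts when that differs from what is currently recorded. The `Pod` class and the `sync` function are illustrative assumptions; a real controller watches the API server and writes its changes back through it.

```python
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    ip: str
    labels: dict[str, str]
    ready: bool


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """A pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def sync(selector: dict[str, str], pods: list[Pod], current: list[str]) -> tuple[list[str], bool]:
    """One pass of the loop: return (desired endpoints, whether an update is needed)."""
    # Observe: derive the desired state from the pods that exist right now.
    desired = sorted(p.ip for p in pods if p.ready and matches(selector, p.labels))
    # Compare: only report a change when desired and current state differ.
    return desired, desired != current


pods = [
    Pod("web-1", "10.0.0.5", {"app": "web"}, ready=True),
    Pod("web-2", "10.0.0.6", {"app": "web"}, ready=False),  # not ready: excluded
    Pod("db-1", "10.0.0.7", {"app": "db"}, ready=True),     # labels differ: excluded
]
endpoints, changed = sync({"app": "web"}, pods, current=[])
print(endpoints, changed)
```

Running the same pass again with the updated list reports no change, which is what lets a controller loop continuously without repeating work.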

Kubelet

The kubelet is the node agent that runs on every node in the cluster. As mentioned earlier, every application in the cluster runs inside a pod, so there has to be a component that builds and runs the pods and the containers inside them. The kubelet works with the node's container runtime to create the pods assigned to its node with the correct configuration, and it reports their status back to the API server.

Kube Proxy

After you set up all the other components of a cluster, there has to be a way for traffic to reach the workloads running on it. kube-proxy runs on every node and maintains network rules on that node so that traffic sent to a Kubernetes Service is forwarded to the pods backing it.

kube-proxy can perform simple TCP, UDP, and SCTP stream forwarding, or round-robin TCP, UDP, and SCTP forwarding, across a set of backends.
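Round-robin forwarding across a set of backends hands each new connection to the next backend in the list, wrapping around at the end. The sketch below illustrates that idea with hypothetical backend addresses; it is not kube-proxy's implementation, and in practice kube-proxy's iptables mode picks backends at random, while its IPVS mode supports round-robin among other algorithms.

```python
from itertools import cycle, islice

# Hypothetical pod endpoints behind one Service.
backends = ["10.0.0.5:8080", "10.0.0.6:8080", "10.0.0.7:8080"]

# cycle() yields the backends in order and starts over after the last one,
# so each new connection goes to the next backend in turn.
picker = cycle(backends)

first_four = list(islice(picker, 4))
print(first_four)
```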

To learn about the Kubernetes Architecture in-depth, please check out our dedicated blog where we have covered every component of the Kubernetes architecture in detail.

Kubernetes Distributions

Similar to Linux distributions, Kubernetes also has several different flavors. The Kubernetes open-source project was initially created by Google and has been adopted by many different organizations. Each has molded Kubernetes to work in different ways to achieve specific goals. This has led to many Kubernetes distributions, some of which are designed to be lightweight, others that have a greater focus on security, and some that have been stripped down and meant to be used locally as development environments.

Kubernetes is a distributed system, which means it can be difficult to manage. To avoid managing Kubernetes clusters yourself, you can use one of the managed Kubernetes services offered by popular cloud providers such as AWS, Google Cloud, Azure, Civo, DigitalOcean, and many more.

Apart from the managed Kubernetes options, you also have the option to self-host a Kubernetes cluster on your own infrastructure. Tools such as kubeadm, kOps, and RKE2 help bootstrap the cluster. One of the key considerations of self-hosting is ensuring that you have the expertise to start, manage, and maintain a Kubernetes cluster. Many organizations lack this fine-grained expertise and hence prefer a managed service. This blog will help you decide whether a managed cluster like EKS or a self-hosted option like kOps is right for you.

When developing applications for Kubernetes, developers tend to use lightweight clusters such as minikube, kind, k3d, or K3s. K3s is a lightweight Kubernetes distribution, and many cloud providers also use it for their Kubernetes offering because it helps reduce hosting costs. Read our guide on how you can use K3s for deploying applications.

Kubernetes Workloads

Kubernetes has several building blocks that are useful for deploying, updating, and managing your applications. These building blocks are called Kubernetes workloads. In total, 75 resources ship with Kubernetes. However, since Kubernetes is extensible, many custom resources can be added to the cluster using Custom Resource Definitions (CRDs).

Every Kubernetes resource has a certain function. Some workloads might work in combination with several different workloads. Let us look at some of these Kubernetes resources that you will have to use in everyday activities.

Kubernetes Pods

A pod is the smallest deployable Kubernetes unit. Applications inside a pod run as containers, and the pod acts as a wrapper around them. A pod can contain multiple containers. All containers in a pod share the same network namespace (IP address and ports), can share storage volumes, and are scheduled together onto the same node according to the pod's specification.

Each Kubernetes pod is typically defined in a YAML manifest, which you submit to the cluster with the kubectl apply or kubectl create command. Whenever you create a pod, it is also important to define resource requests and limits for its containers. The scheduler uses the requests to decide which node has enough room for the pod, while the limits cap how much CPU and memory a container can consume. Together, they help share system resources among the different pods running on a node.
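A minimal pod manifest with resource requests and limits might look like the sketch below. It builds the manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts; the pod name, labels, image tag, and resource values are example assumptions, not recommendations.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.27",
                "ports": [{"containerPort": 80}],
                "resources": {
                    # Requests: what the scheduler reserves for the container on a node.
                    "requests": {"cpu": "250m", "memory": "128Mi"},
                    # Limits: the most CPU and memory the container may use at runtime.
                    "limits": {"cpu": "500m", "memory": "256Mi"},
                },
            }
        ]
    },
}

manifest = json.dumps(pod, indent=2)
print(manifest)
```

Saving the output as `pod.json` and running `kubectl apply -f pod.json` would create the pod.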

Kubernetes ReplicaSets

Kubernetes pods are ephemeral, which means they can be deleted at any time due to insufficient system resources, an application error, or any other reason. If a production application is running inside the pod, the application will be unavailable until the pod is recreated. If you try to manually watch the pod’s status and recreate it when it is destroyed, it can consume a lot of time, and there can be a significant delay between the pod’s deletion and its recreation.

To keep pods running at all times, Kubernetes has a resource called a ReplicaSet. A ReplicaSet ensures that a specified number of pods with a given configuration is running at any given point in time. If a pod is deleted, either manually or by a system process, the ReplicaSet creates a replacement.
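The ReplicaSet behavior above is a reconciliation loop: compare the desired replica count with the pods actually running, then take the corrective action. A minimal sketch of that comparison follows; the function name and return shape are hypothetical, and a real ReplicaSet controller applies its own rules when choosing which surplus pods to delete.

```python
def reconcile(desired_replicas: int, running_pods: list[str]) -> tuple[int, list[str]]:
    """Compare desired vs. observed pods and return the corrective actions.

    Returns (number_of_pods_to_create, names_of_pods_to_delete).
    """
    missing = desired_replicas - len(running_pods)
    if missing > 0:
        return missing, []                # too few pods: create replacements
    if missing < 0:
        return 0, running_pods[missing:]  # too many pods: delete the surplus
    return 0, []                          # already converged: nothing to do


# One pod was deleted, so the loop asks for one replacement.
print(reconcile(3, ["web-a", "web-b"]))
```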

Kubernetes Deployments & StatefulSets

In Kubernetes, a Deployment is a way to create ReplicaSets and pods, and to roll out updates seamlessly. When you want to deploy an application in Kubernetes, you will usually use a Deployment instead of creating a ReplicaSet directly. Deployments also help progressively roll out application updates using deployment strategies.
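A Deployment that rolls out updates one pod at a time could be declared as sketched below, again built in Python and printed as JSON. The names, image, and the `maxSurge`/`maxUnavailable` values are illustrative assumptions; `RollingUpdate` is the default strategy, and these two fields control how many pods may be added or taken down during a rollout.

```python
import json

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 3,
        # The Deployment (and its ReplicaSets) manage pods matching this selector.
        "selector": {"matchLabels": {"app": "web"}},
        "strategy": {
            "type": "RollingUpdate",
            # Add at most one extra pod during an update and never drop
            # below the desired replica count.
            "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
        },
        "template": {
            # Pod labels must satisfy the selector above.
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [{"name": "web", "image": "nginx:1.27"}]},
        },
    },
}

manifest = json.dumps(deployment, indent=2)
print(manifest)
```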

Similarly, when you have applications that need to maintain state, you will want to use a StatefulSet, which gives each pod a stable identity and its own persistent storage. For example, a database such as MySQL needs to keep its state, so it is typically deployed as a StatefulSet. Our dedicated article highlights the differences between Deployments and StatefulSets.
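The stable identity a StatefulSet gives its pods follows a predictable pattern: pods are named `<statefulset-name>-<ordinal>`, each gets a DNS name through a headless Service, and each gets its own PVC named after the volume claim template and the pod. The helper below only computes those names; the `mysql`, `default`, and `data` values and the default `cluster.local` domain are assumptions for illustration.

```python
def statefulset_identities(name: str, service: str, namespace: str, replicas: int,
                           claim_template: str, cluster_domain: str = "cluster.local"):
    """Return (pod name, pod DNS name, PVC name) for each replica ordinal."""
    identities = []
    for ordinal in range(replicas):
        pod = f"{name}-{ordinal}"  # ordinals start at 0; a recreated pod keeps its name
        dns = f"{pod}.{service}.{namespace}.svc.{cluster_domain}"  # via the headless Service
        pvc = f"{claim_template}-{pod}"  # one PersistentVolumeClaim per pod
        identities.append((pod, dns, pvc))
    return identities


for pod, dns, pvc in statefulset_identities("mysql", "mysql", "default", 2, "data"):
    print(pod, dns, pvc)
```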

Kubernetes Services

A Kubernetes Service provides a way to expose the application running in a set of pods to the network. Every pod gets its own IP address, but pods are ephemeral and a recreated pod receives a new IP, so other workloads cannot rely on a pod’s address. Pods are also not reachable from outside the cluster by default.

To give pods a stable address, you create a Service and map it to the pods, usually through a label selector. The Service provides a stable virtual IP address and DNS name and, depending on its type, makes the pods reachable from inside the cluster or from the outside world. Each Service has a set of endpoints, the IP addresses of the pods mapped to it, which are used to route traffic to those pods.

Our dedicated article covers the various Kubernetes Services in depth.

Kubernetes Namespaces

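In Kubernetes, it’s important to separate groups of workloads into logically isolated spaces. This is done through a resource called a Namespace. Let’s say that you have deployed a monitoring stack and some networking resources, and you also have your core applications running in the same cluster. Instead of mixing them together, you can place each group in its own namespace, which keeps their resource names separate and lets you apply access controls and resource quotas per namespace.

Namespaces can also be used in more advanced ways to serve multiple tenants on a single cluster. This is known as multi-tenancy.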

Kubernetes Jobs

A Kubernetes Job is used for running tasks within the cluster. A Job creates one or more pods in which the defined task runs. When a specified number of pods complete successfully, the Job is marked as complete. Kubernetes has two main types of jobs:

- Job: A Job runs its task to completion once and then terminates

- CronJob: A CronJob creates Jobs on a repeating schedule, such as every night at a fixed time
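A CronJob manifest pairs a cron-format schedule with a template for the Jobs it creates. The sketch below builds one in Python and prints it as JSON; the name, image, command, and schedule are hypothetical examples. Pods created by a Job must use a `restartPolicy` of `Never` or `OnFailure`.

```python
import json

cronjob = {
    "apiVersion": "batch/v1",
    "kind": "CronJob",
    "metadata": {"name": "nightly-report"},
    "spec": {
        # Cron fields: minute hour day-of-month month day-of-week.
        "schedule": "0 2 * * *",  # every day at 02:00
        "jobTemplate": {
            "spec": {
                "template": {
                    "spec": {
                        "containers": [{
                            "name": "report",
                            "image": "busybox:1.36",
                            "command": ["sh", "-c", "echo generating report"],
                        }],
                        # Job pods may not use "Always"; retry in place on failure.
                        "restartPolicy": "OnFailure",
                    }
                }
            }
        },
    },
}

manifest = json.dumps(cronjob, indent=2)
print(manifest)
```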

To learn about the different Kubernetes workloads in depth, please check out this blog.

Kubernetes Networking

As we have seen previously, Kubernetes has a lot of different elements and works in a distributed manner. Nodes can be in multiple different physical locations, and they are all connected through the network. Due to this distributed nature, it is important to have robust networking to properly operate a Kubernetes cluster.

Whenever you create a pod, it gets an IP address, but that address changes whenever the pod is recreated, and it is not reachable from outside the cluster. This is because the pod has not been exposed in any way. To expose the pod, you have to create a Kubernetes Service.

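There are three main types of Kubernetes Services:

- ClusterIP: Exposes the pods on an internal IP address that is reachable only from within the cluster, enabling pod-to-pod communication (this is the default type)

- NodePort: Exposes the pods on a port on every node’s IP address, allocated by default from the range 30000 to 32767

- LoadBalancer: Exposes the pods to external traffic through an external load balancer, typically provisioned by the cloud provider, which distributes the traffic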
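A NodePort Service might be declared as in the sketch below. The helper and its names are hypothetical; it checks a requested `nodePort` against the default 30000 to 32767 range (cluster administrators can change it with the API server's `--service-node-port-range` flag) and omits the field when none is given, so that Kubernetes allocates a port itself.

```python
from typing import Optional

# Default NodePort range; range() excludes its end, so 32768 keeps 32767 valid.
NODE_PORT_RANGE = range(30000, 32768)


def nodeport_service(name: str, app: str, port: int, target_port: int,
                     node_port: Optional[int] = None) -> dict:
    """Build a NodePort Service that selects pods labelled app=<app>."""
    if node_port is not None and node_port not in NODE_PORT_RANGE:
        raise ValueError(f"nodePort {node_port} is outside the default 30000-32767 range")
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        port_spec["nodePort"] = node_port  # omit to let Kubernetes allocate one
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": "NodePort", "selector": {"app": app}, "ports": [port_spec]},
    }


svc = nodeport_service("web", "web", port=80, target_port=80, node_port=30080)
print(svc)
```

With `nodePort` omitted, changing `type` to `ClusterIP` or `LoadBalancer` selects the other two service types.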

To get an in-depth understanding of the different service types, and how to use them, refer to our blog on Kubernetes Services.

Apart from the Services above, Kubernetes also has a resource known as Ingress. An Ingress is an API object that manages external access to services in the cluster, typically over HTTP, and can route and load-balance traffic based on rules that you define. An Ingress can act as a single point of entry for your Kubernetes cluster: instead of exposing multiple services individually, you can use one Ingress to provide access to all of those applications. An Ingress only takes effect when an ingress controller is running in the cluster.
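With the `Prefix` path type, an Ingress matches request paths element by element (split on `/`), and the longest matching prefix wins. The sketch below approximates that rule for a single host; the rules and service names are hypothetical, and real routing is performed by the ingress controller, which also handles hosts, `Exact` paths, and other details omitted here.

```python
from typing import Optional


def segments(path: str) -> list[str]:
    """Split a URL path into its non-empty elements: "/api/v1/" -> ["api", "v1"]."""
    return [part for part in path.split("/") if part]


def route(path: str, rules: list[tuple[str, str]]) -> Optional[str]:
    """Return the backend Service of the longest Prefix rule that matches path."""
    request = segments(path)
    best_service, best_len = None, -1
    for prefix, service in rules:
        wanted = segments(prefix)
        # Element-wise match: "/api" matches "/api" and "/api/v1" but not "/apix".
        if request[:len(wanted)] == wanted and len(wanted) > best_len:
            best_service, best_len = service, len(wanted)
    return best_service


rules = [("/", "frontend"), ("/api", "api-backend")]
print(route("/api/v1/users", rules), route("/apix", rules))
```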

Our dedicated article covers Kubernetes Ingress in depth and walks you through creating an Ingress resource.

Kubernetes Storage

By default, pods are ephemeral, which means that they are designed to be destroyed and recreated. Whenever a pod is destroyed, all the data stored in its containers is lost as well. Certain types of ephemeral storage can be mounted to a pod, but they are not suitable for data that must outlive the pod.

Ephemeral storage is useful for certain use cases, but when you want to persist the data long after the pod is destroyed, ephemeral storage falls short. For this, Kubernetes has a few different resources for persistent storage.

Kubernetes has a resource called a Persistent Volume (PV), which is useful when working with data. A Persistent Volume can be backed by a cloud storage resource such as an Amazon EBS volume or, through a CSI driver, an S3 bucket, or by local storage on the node. In short, Persistent Volumes help retain data even after the lifecycle of the pod is complete.

Persistent Volumes are assigned to pods using Persistent Volume Claims (PVCs). A PV is bound to a PVC, and the pod references the PVC to mount the volume.

Oftentimes, you may not want to, or be able to, create Persistent Volumes by hand. In this case, Kubernetes has the StorageClass resource, which dynamically provisions Persistent Volumes when a PVC requests them.
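A PersistentVolumeClaim that asks a StorageClass for dynamically provisioned storage, together with the pod fields that mount it, could look like the sketch below. The claim name, the `standard` StorageClass name, the size, the image, and the mount path are assumptions; check which StorageClasses exist in your cluster before relying on one.

```python
import json

# Claim 10 GiB from a StorageClass; a matching PV is provisioned on demand.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "mysql-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],  # mountable read-write by a single node
        "storageClassName": "standard",    # hypothetical StorageClass name
        "resources": {"requests": {"storage": "10Gi"}},
    },
}

# Fragment of a pod spec: the volume points at the claim, and the container mounts it.
pod_spec_fragment = {
    "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": "mysql-data"}}],
    "containers": [{
        "name": "mysql",
        "image": "mysql:8.4",
        "volumeMounts": [{"name": "data", "mountPath": "/var/lib/mysql"}],
    }],
}

print(json.dumps(pvc, indent=2))
```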

In order to ensure that all cloud storage can work with Kubernetes, the Container Storage Interface (CSI) was created to standardize the methods of storing data in Kubernetes. Using the various CSI drivers, Kubernetes can support a number of different storage types.

For more information about Kubernetes storage, refer to this article.

While working with any kind of storage, it is important to back up the data in case of data loss. Kubernetes stores its cluster state in etcd, but operating and backing up etcd can be tricky. Tools such as Velero help back up Kubernetes cluster resources and persistent volumes.

Kubernetes Security

Similar to every computing system, when deploying applications to Kubernetes, you need to ensure that the cluster and the applications are secure. Security becomes complicated in Kubernetes since there are many different components working together. Kubernetes has several built-in mechanisms that can help secure a cluster, as well as external tools that enhance security.

Ensuring overall Kubernetes security starts from the containers. While building containers, it is important to ensure that the containers are secure. This includes using up-to-date dependencies, secure base images, and scanning the container image once it’s built. To learn about the best practices for securing containers, please check out this blog.

Your applications might need access keys to authenticate with and be authorized by certain services. Hardcoding these sensitive keys into the container image is not a good idea: if the container gets compromised, the credentials will be compromised as well. Kubernetes provides two resources, Secrets and ConfigMaps, that help manage sensitive data and configuration data respectively.

However, note that Secrets are not secure by default. They store the secret data in base64-encoded form, and base64 is an encoding, not encryption. Unless encryption at rest is configured for etcd, the secret data is not encrypted. To increase security, DevOps teams often use an external secret store such as AWS Secrets Manager, HashiCorp Vault, or Azure Key Vault.
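The point about base64 is easy to demonstrate. The sketch below builds a Secret the way its `data` field expects, then decodes the value again with nothing but the standard library, showing that no key is involved; the Secret name and the `s3cr3t` value are made-up examples.

```python
import base64

# Values in a Secret's `data` field are base64 text, not ciphertext.
encoded = base64.b64encode(b"s3cr3t").decode("ascii")

secret = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    "data": {"password": encoded},
}

# Anyone who can read the Secret object can reverse the encoding; no key is needed.
decoded = base64.b64decode(secret["data"]["password"]).decode("ascii")
print(encoded, decoded)
```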

When you want to share cluster access with different team members, it is generally not a good idea to give every member admin-level access. Instead, access should be restricted to just what each user requires. Kubernetes has a Role-Based Access Control (RBAC) mechanism that lets you fine-tune the level of access for each individual user. To learn how to implement RBAC, please refer to this blog.
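As an illustration, the sketch below defines a Role that grants read-only access to pods in one namespace and a RoleBinding that grants that Role to one user, wrapped in a `List` so both can be applied from a single JSON file. The `dev` namespace, the user `jane`, and the object names are hypothetical.

```python
import json

role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"namespace": "dev", "name": "pod-reader"},
    "rules": [{
        "apiGroups": [""],                  # "" is the core API group, where pods live
        "resources": ["pods"],
        "verbs": ["get", "list", "watch"],  # read-only access
    }],
}

binding = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "RoleBinding",
    "metadata": {"namespace": "dev", "name": "read-pods"},
    # Who receives the permissions.
    "subjects": [{"kind": "User", "name": "jane", "apiGroup": "rbac.authorization.k8s.io"}],
    # Which Role's permissions they receive.
    "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": "rbac.authorization.k8s.io"},
}

manifest = json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, binding]}, indent=2)
print(manifest)
```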

Extending Kubernetes

The design philosophy of Kubernetes is based on the principles of automation, API-driven design, extensibility, and loose coupling between components. Kubernetes follows a number of open standards, such as the Container Runtime Interface (CRI), the Container Network Interface (CNI), and the Container Storage Interface (CSI), which make it easy to extend its functionality. Kubernetes Operators, which combine custom resources with custom controllers, are useful for adding functionality to Kubernetes. There are also many tools in the cloud native landscape that are designed to work in unison with Kubernetes, and this ecosystem can be leveraged to build a robust Kubernetes cluster that fits business requirements.

When working with Kubernetes at scale, autoscalers can be highly beneficial. They can automatically scale pods or nodes based on factors such as traffic, time of day, or a particular event that occurs in the cluster. KEDA (Kubernetes Event-driven Autoscaling) is a popular autoscaler used with Kubernetes.
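Event-driven autoscalers such as KEDA build on the Horizontal Pod Autoscaler, whose documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The sketch below applies that rule with a tolerance band and min/max bounds; the parameter defaults are assumptions for illustration (the HPA's own default tolerance is 0.1), and the real controller adds further behavior such as stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Apply the HPA scaling rule, then clamp to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    # Ignore small deviations so the workload does not flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))
```

For example, 4 replicas averaging 90% CPU against a 60% target gives ceil(4 * 1.5) = 6 replicas.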

Similar to autoscaling tools, there are many different tools that can extend the functionality of Kubernetes. Some of these include service meshes, API gateways, Ingress controllers, custom schedulers, and more.

Managing Kubernetes Applications

The whole purpose of deploying workloads onto Kubernetes is to achieve greater scale and reliability. However, as scale increases, so does management complexity. There are a number of different tools that DevOps teams incorporate into their workflow to simplify cluster management. We will look at some of the key tools that are useful for managing clusters.

- K8s Package Managers: A single K8s application can have multiple resources which can be difficult to manage and deploy to multiple environments. For this, teams use tools such as Helm to package and deploy apps across multiple environments.

- GitOps tools: GitOps is a practice in which the desired state of your Kubernetes cluster is declared in Git repositories, and an agent continuously reconciles the cluster to match it. Tools such as Argo CD and Flux are widely used to implement GitOps practices for managing applications.

- Kubernetes Dashboard: Managing Kubernetes through just the command line can become challenging. To get visibility into all workloads and manage multiple clusters, teams often prefer a dedicated Kubernetes dashboard.

Conclusion

Kubernetes is a highly sophisticated tool that helps orchestrate containers across multiple environments. Many industries use Kubernetes to run their applications, and some organizations run multiple Kubernetes clusters across different regions to manage their workloads.

Kubernetes has a number of different components, ranging from core architectural components to resources essential for running applications. It has resources for managing network communication between pods as well as storage resources. Kubernetes is also cloud-agnostic, which means you can run a Kubernetes cluster in almost any environment and reduce the risk of vendor lock-in.

It is also highly extensible, which makes it a versatile option that fits almost every business use case. The entire Kubernetes ecosystem makes it a great choice for running and scaling applications in production environments.